Is Google Search Console a worthy replacement for keywords lost in GA?

Keywords not provided: three words that have been a thorn in the side of SEO professionals since late 2011.

Back then, it was only a small thorn. It has grown since, and "not provided" now accounts for 99% of all organic keywords in Google Analytics. With it, businesses lost a great deal of visibility into their search traffic.

Deep insight was lost over the years.

But data processing has progressed exponentially since then.

The analysis itself would also have worked back in 2011.

The limiting factor was handling extremely large amounts of data.

Simply uploading the information back into our customers’ accounts would have taken 13 hours on AWS.

And it would have been prohibitively expensive.

Organic keywords Google Analytics

In an SEO utopia, keyword data would belong to webmasters.

With the arrival of more sophisticated data analysis, website owners now have a chance to reclaim the coveted organic keywords that Google no longer provides.

A small team of world-class experts, mostly from the Fraunhofer Institute of Technology, know how to make sense of large amounts of data.

With access to vast amounts of data points from browser extensions, the team can tackle this as a data science problem.

With this enormous data set of the URLs users visit, there is a way to reverse engineer the keywords a page ranks for.

Next, you connect rank monitoring services, SEO tools and crawlers, along with “cognitive services” like Google Trends, Bing Cognitive Services and Wikipedia’s API.

Then you integrate Google Search Console.

Google Analytics organic search keyword not provided

Using maths to retrieve hidden keywords takes a multipronged approach.

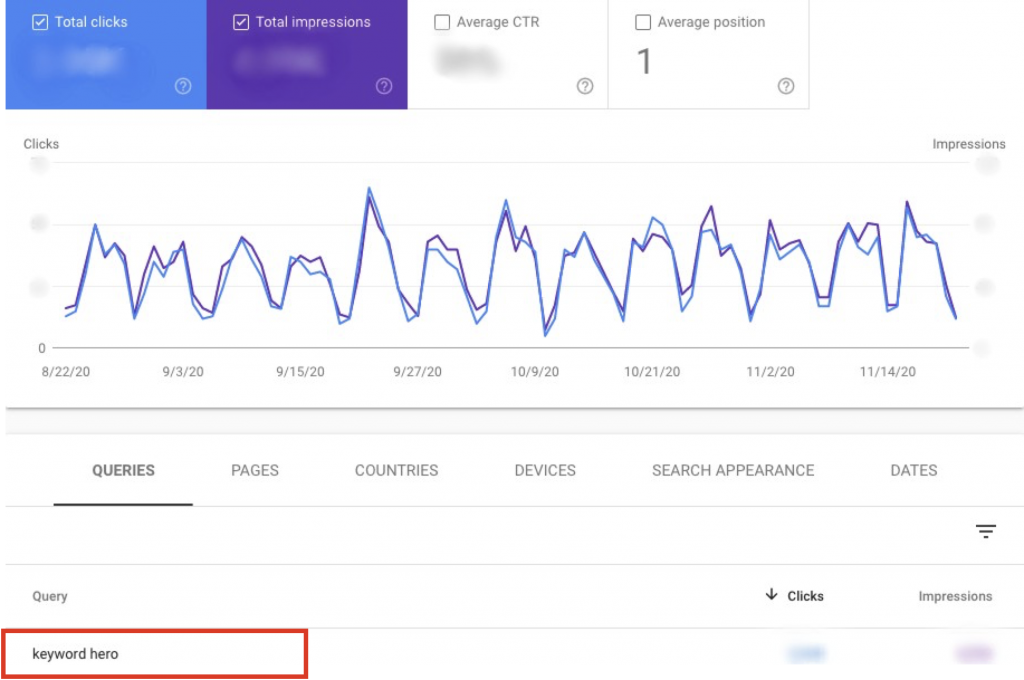

Google Search Console and Google Analytics are often used interchangeably.

Yet they serve different purposes.

Performance data from GSC is a measure of what’s happening on Google itself, and not necessarily what is happening on your site.

However, as you introduce more specificity into how you review a website, the precision of the data reported in Google Search Console increases.

Hence, if you add hundreds of subdirectories to GSC, the increase in data precision can help draw back the curtain on "not provided" in analytics.

Google Search Console and Google Analytics

Each of these valuable Google tools serves a different purpose.

Google Analytics is user-oriented.

It shows you who visits and interacts with the main content on your website.

Google Search Console is search-engine focused.

It shows site owners how to improve visibility and presence in the SERPs.

As such, the two tools provide different metrics, with Google Analytics favoring clicks and Google Search Console prioritizing impressions.

This is why we use both along with 7 other data sources.

Can I not just use Google Search Console to find keywords not provided?

Google Search Console is useful for some light-touch insight into how your website is performing on search, but nothing like the on-site behavioral data which Google Analytics used to provide.

Google only takes a measurement when the results page is used.

If a results page is not used during the period, no data is collected.

Structurally, GSC is closer to Google Analytics than to an advanced SEO measurement tool like Keyword Hero.

GSC does not enable a valid comparison between mobile and desktop data.

That is because of the significantly different user behavior.

For example, a smartphone user might click through to the second or third page of the SERPs less frequently than a desktop user.

Since GSC data is based on this user behavior, it doesn’t give the complete picture.

Keyword Hero ensures that desktop and mobile can be compared with a high degree of accuracy.

SERP ranking

Ranking distribution is a key feature of GSC.

Yet only pages that appear on page one of the SERP are considered.

This is because GSC data is only collected when a searcher accesses the search page.

Hence, the ranking distribution cannot be accurately determined.

There are too few users accessing the second or third page of results to provide a reliable evaluation.

Data transparency

Search Console data has been consolidated since 2019 based on the canonical URL given in an HTML page.

Thus Google obscures which URL was actually displayed in the search results.

For larger websites, this can create problems.

It means that configuration errors, redirects, AMP conversions, etc. can no longer be uniquely tracked.

Keyword data

The GSC API counts and delivers keywords as a combination of keyword / term, URL, device and country.

A term like "keywords not provided" is delivered as 130 different combinations.

Keyword Hero counts each keyword once.
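
The difference between the two counting methods can be sketched in a few lines of Python. This is only an illustration, not Keyword Hero's actual implementation: the rows and their field names are simplified, hypothetical stand-ins for what a keyword / URL / device / country export might look like.

```python
from collections import defaultdict

# Hypothetical rows: one per (query, page, device, country) combination.
rows = [
    {"query": "keywords not provided", "page": "/blog/a", "device": "MOBILE", "country": "usa", "clicks": 3, "impressions": 40},
    {"query": "keywords not provided", "page": "/blog/a", "device": "DESKTOP", "country": "usa", "clicks": 2, "impressions": 25},
    {"query": "keywords not provided", "page": "/blog/b", "device": "MOBILE", "country": "gbr", "clicks": 1, "impressions": 10},
    {"query": "organic keywords", "page": "/blog/a", "device": "DESKTOP", "country": "usa", "clicks": 4, "impressions": 30},
]


def aggregate_by_query(rows):
    """Collapse all combinations of a query into a single entry."""
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0, "combinations": 0})
    for row in rows:
        entry = totals[row["query"]]
        entry["clicks"] += row["clicks"]
        entry["impressions"] += row["impressions"]
        entry["combinations"] += 1
    return dict(totals)


per_keyword = aggregate_by_query(rows)
print(len(rows), "rows collapse into", len(per_keyword), "keywords")
```

Counting rows would report four "keywords" here, while counting each keyword once reports two.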

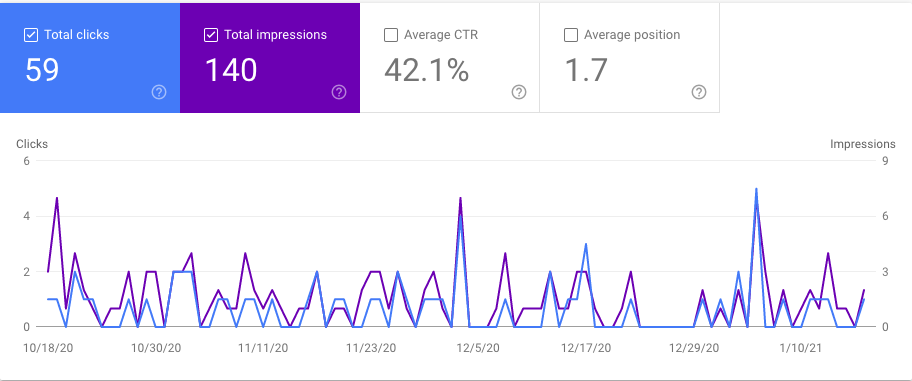

In addition, the average position on GSC can be confusing.

The position information often has decimal places.

You can see an average position of 1.7 for a keyword.

The decimal place results from Google’s method of counting the results.

Google counts the results that contain a link to the target page from top to bottom and then continues with other search result elements.

This counting method makes sense from the perspective of a search engine.

But for digital marketers, it can lead to false assumptions.

If your page ranks No. 1 and the only additional element in the results is a knowledge panel in which your website also appears, Google will report an average position of 6, i.e. (position 1 + position 11) / 2.
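
The arithmetic behind that example is a plain mean of the counted positions. The helper below is a hypothetical illustration of the example only; it does not claim to reproduce how Google computes the figure internally.

```python
def average_position(positions):
    """Plain arithmetic mean of all positions at which the site was counted."""
    return sum(positions) / len(positions)


# Organic result counted at position 1, knowledge panel counted at position 11.
print(average_position([1, 11]))  # 6.0
```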

Google Search Console data is not complete

GSC was promoted as a replacement for the keyword data that used to be derived from the referrer.

However, GSC data is filtered by Google for privacy reasons.

Unfortunately, there is no information available from Google on the scope, extent and background to the level of keyword filtering.

It is also unknown whether the filtering changes over time.

GSC data is often compared to the previously available keyword data, which came from the referrer string.

Yet it’s not the same. Data is missing and the rules are unclear.

Nonetheless, GSC will give you a good starting point to see what’s happening on the results page, but you will need other data sources for a well-rounded interpretation.

For each keyword on GSC, you can access data for clicks, impressions, click-through rates (CTRs) and average position.

This gives you a good idea of what the most important organic keywords are for your website.
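
As a rough sketch of how these four metrics relate, the snippet below computes CTR from clicks and impressions and ranks keywords by clicks. The rows and field names are made up for illustration and are not taken from a real account.

```python
def click_through_rate(clicks, impressions):
    """CTR is clicks divided by impressions; 0.0 if there were no impressions."""
    return clicks / impressions if impressions else 0.0


def top_keywords(rows, n=3):
    """Return the n rows with the most clicks, highest first."""
    return sorted(rows, key=lambda row: row["clicks"], reverse=True)[:n]


# Hypothetical per-keyword data.
keyword_rows = [
    {"query": "adidas high tops", "clicks": 25, "impressions": 100, "position": 2.0},
    {"query": "buy adidas high tops", "clicks": 40, "impressions": 80, "position": 1.5},
    {"query": "adidas high tops price", "clicks": 5, "impressions": 200, "position": 7.0},
]

for row in top_keywords(keyword_rows):
    ctr = click_through_rate(row["clicks"], row["impressions"])
    print(f'{row["query"]}: {row["clicks"]} clicks, CTR {ctr:.1%}')
```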

The problem with this report is that you can only get data for your entire website, not individual pages.

And you can’t map it to individual sessions on Google Analytics.

It doesn’t discern whether your site shows up on page one or page 1000 of Google.

Hence impressions in this report don’t necessarily mean people are clicking through to the page where you appear on Google.

Nonetheless, GSC plays a role as we dive deeper into keywords not provided.

Going deeper with Keyword Hero

At Keyword Hero, we use both GA and GSC to discover new keywords.

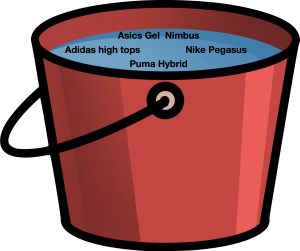

First, we merge GSC data with the combined data from the ‘cognitive services’ and rank monitoring data to compute candidate keywords and a first likelihood for each.

The result is one big bucket with all possible keywords.
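
A minimal sketch of such a bucket might look like the following. The scoring rule (the share of sources that mention a keyword) is purely an assumption made for illustration, as are the source names and keywords; the article does not describe how the first likelihood is actually computed.

```python
def build_bucket(sources):
    """Merge keyword candidates from several sources into one bucket.

    sources: dict mapping a source name to a set of candidate keywords.
    Returns a dict mapping each keyword to a naive first likelihood,
    here assumed to be the share of sources that contain the keyword.
    """
    seen_in = {}
    for name, keywords in sources.items():
        for keyword in keywords:
            seen_in.setdefault(keyword, set()).add(name)
    total = len(sources)
    return {keyword: len(names) / total for keyword, names in seen_in.items()}


# Hypothetical inputs from two sources.
sources = {
    "gsc": {"adidas high tops", "buy adidas high tops"},
    "rank_monitoring": {"adidas high tops", "adidas shop near me"},
}

bucket = build_bucket(sources)
print(bucket["adidas high tops"])  # 1.0, found in both sources
```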

Clustering and classifying keywords not provided

Without clustering and classifying, these keywords would be of little value.

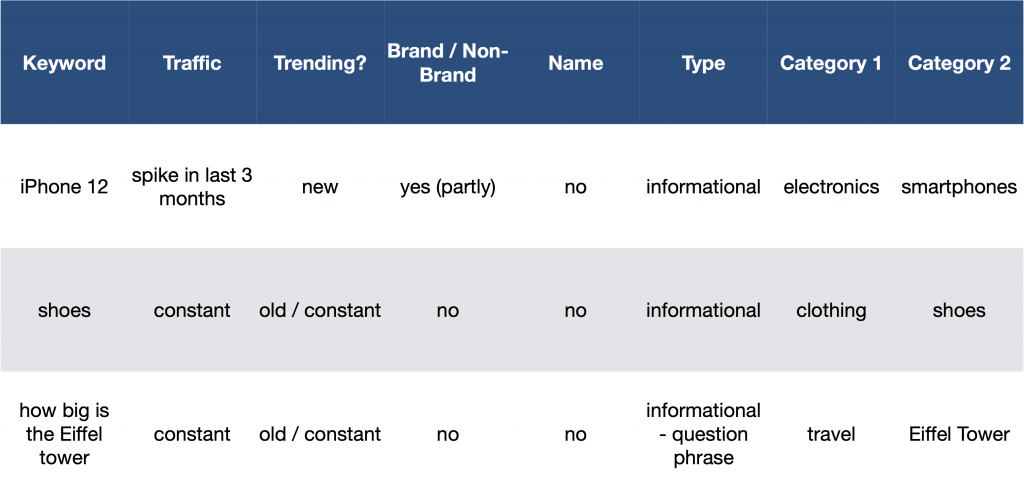

Consequently, the next step involves using external APIs to look for behavior changes in a keyword’s history.

Was there an uptick in traffic in the previous 12 months by mobile users?

Did a spike or dip occur?

Is there a reference article on Wikipedia? If so, how does it behave?
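
One simple way to answer the spike-or-dip question is to flag months that deviate strongly from the average. The sketch below uses a z-score with an arbitrary threshold of 2; both the method and the monthly numbers are assumptions for illustration, not the approach confirmed by the article.

```python
from statistics import mean, pstdev


def find_spikes(series, threshold=2.0):
    """Return indices whose value lies more than `threshold` standard
    deviations away from the mean of the series (spikes and dips)."""
    mu = mean(series)
    sigma = pstdev(series)
    if sigma == 0:
        return []  # a perfectly flat series has no spikes
    return [i for i, value in enumerate(series) if abs(value - mu) / sigma > threshold]


# Hypothetical interest values for the previous 12 months.
monthly_interest = [100, 100, 100, 100, 100, 100, 100, 100, 100, 100, 100, 300]
print(find_spikes(monthly_interest))  # [11]
```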

After we have created a keyword set and checked for potential spikes, we try to understand the keyword.

Is it a brand or a non-brand keyword?

Is it a person / brand name?

This is important for n-gram analysis.

What is n-gram analysis?

We will go through this in more detail in a future article.

For those of you who are new to N-grams, think of an N-gram as a sequence of words, where one word = one gram.

For instance, take a look at the following example.

- “New York” is a 2-gram

- “Friday the 13th” is a 3-gram

- “He stood up slowly” is a 4-gram

Which of these three N-grams have you seen most frequently?

We can assume it is “New York” and “Friday the 13th”.

Conversely, “he stood up slowly” is seen much less often.

In essence “he stood up slowly” is an example of an N-gram that does not occur as often in sentences as examples one and two.
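
To make this concrete, here is a small sketch that splits text into N-grams and counts how often each one occurs. The three-line corpus is invented for the example; a real analysis would run over far more text.

```python
from collections import Counter


def ngrams(text, n):
    """Split text on whitespace and return all runs of n consecutive words."""
    words = text.lower().split()
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


print(ngrams("New York", 2))         # [('new', 'york')]
print(ngrams("Friday the 13th", 3))  # [('friday', 'the', '13th')]

# Hypothetical toy corpus of search phrases and sentences.
corpus = [
    "flights to new york",
    "new york hotels",
    "he stood up slowly in new york",
]

bigram_counts = Counter(gram for line in corpus for gram in ngrams(line, 2))
print(bigram_counts[("new", "york")])  # 3
print(bigram_counts[("stood", "up")])  # 1
```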

Assigning a probability to the occurrence of an N-gram, or to a word occurring next in a sequence of words, can be useful.

Why?

First, it can help you to decide which N-grams can be clustered together to form single entities (like “New York” merged into one word, or “fresh food” as one word).

It can also help you make next-word predictions.

Say you have the partial sentence “fresh food”.

It is more likely that the next word is going to be “delivery” or “store” than “for rent”.
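
The next-word idea can be sketched by counting which words follow a given context. The corpus below is invented, and the counting model is a textbook simplification rather than the model Keyword Hero uses.

```python
from collections import Counter, defaultdict


def train_next_word(corpus, context_size=2):
    """Count which word follows each run of `context_size` words."""
    model = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(len(words) - context_size):
            context = tuple(words[i:i + context_size])
            model[context][words[i + context_size]] += 1
    return model


def next_word_probabilities(model, context):
    """Turn the follower counts for a context into probabilities."""
    counts = model.get(tuple(context.lower().split()), Counter())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()} if total else {}


# Hypothetical toy corpus.
corpus = [
    "fresh food delivery",
    "fresh food delivery near me",
    "fresh food store",
    "fresh food delivery service",
]

model = train_next_word(corpus)
print(next_word_probabilities(model, "fresh food"))
# {'delivery': 0.75, 'store': 0.25}
```

In this toy corpus "rent" never follows "fresh food", so it receives no probability at all.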

Next, we try to find out whether the keyword is transactional (“buy Adidas high tops”), navigational (“find Adidas shop near me”) or informational (“Adidas high tops price”).
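
A very rough, rule-based version of this intent check could look like the sketch below. The cue lists are hypothetical and deliberately tiny; a production classifier would be trained on data rather than hard-coded.

```python
# Hypothetical cue lists, chosen only to cover the examples above.
TRANSACTIONAL_WORDS = {"buy", "order", "purchase"}
NAVIGATIONAL_WORDS = {"shop", "store", "login"}
NAVIGATIONAL_PHRASES = ("near me",)


def classify_intent(keyword):
    """Label a keyword as transactional, navigational or informational."""
    text = keyword.lower()
    words = set(text.split())
    if words & TRANSACTIONAL_WORDS:
        return "transactional"
    if words & NAVIGATIONAL_WORDS or any(p in text for p in NAVIGATIONAL_PHRASES):
        return "navigational"
    # Anything without a buying or navigation cue falls back to informational.
    return "informational"


for example in ("buy Adidas high tops", "find Adidas shop near me", "Adidas high tops price"):
    print(example, "->", classify_intent(example))
```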

The result is one big data frame where certain parameters are attributed to the keywords.

After that, we go through six crucial steps.

- analyze differences in the performance of organic traffic

- calculate the probability of a keyword matching a cluster of sessions

- train the classifications

- compute possible keywords

- check results against hard data

- adjust classifications based on results

To get the full details for each of these steps please read here.

The result is a certainty threshold, varying between 80% and 85%, for matching a keyword to a session.

The certainty level is based on the assumption that 95% of all possible keywords have been found in the first bucket.
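
Applying such a threshold can be sketched as follows. The candidate tuples and probabilities are invented, and the rule of keeping the single most likely keyword per session is an assumption made for the example.

```python
def confident_matches(candidates, threshold=0.80):
    """Keep, per session, the most likely keyword whose probability
    reaches the certainty threshold.

    candidates: iterable of (session_id, keyword, probability) tuples.
    """
    best = {}
    for session_id, keyword, probability in candidates:
        if probability < threshold:
            continue  # below the certainty threshold, so no match is reported
        if session_id not in best or probability > best[session_id][1]:
            best[session_id] = (keyword, probability)
    return best


# Hypothetical candidate matches.
candidates = [
    ("session-1", "adidas high tops", 0.90),
    ("session-1", "adidas high tops price", 0.82),
    ("session-2", "buy adidas high tops", 0.60),
    ("session-3", "adidas shop near me", 0.83),
]

print(confident_matches(candidates, threshold=0.80))
print(confident_matches(candidates, threshold=0.85))
```

Raising the threshold from 80% to 85% drops the match for session-3, which shows the trade-off between coverage and certainty.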

The world’s first keyword superhero has solved one of the oldest SEO dilemmas: reclaiming organic search not provided.

In response to the outrage of SEOs, Google decided not to leave website operators completely in the dark.

And that’s where Google Search Console comes into play.

After analyzing, clustering, training, computing and classifying Google’s keyword data, webmasters can once again see their keywords in GA.

Google wanted users to see GSC as the natural successor to the referrer field seen in web server log files.

Yet it functions as more of an extension to Google Analytics than a replacement for keywords not provided.